What is Artificial Intelligence in Cybersecurity?

Artificial intelligence (AI) is reshaping how security teams detect, investigate, and respond to threats. By analyzing vast datasets, identifying patterns, and automating decisions, AI helps defenders operate with greater speed, precision, and scale – far beyond what traditional workflows allow.

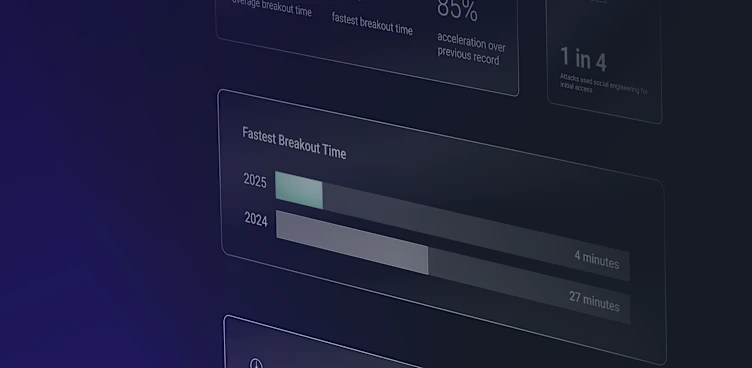

But the same capabilities that benefit defenders also offer advantages to attackers. AI enables faster reconnaissance, automated phishing, and adaptive malware that slips past traditional defenses. Even worse: according to ReliaQuest research, attackers can now achieve lateral movement within just 27 minutes of initial access. Such a short window means attackers can wreak havoc before defenders have a chance to respond.

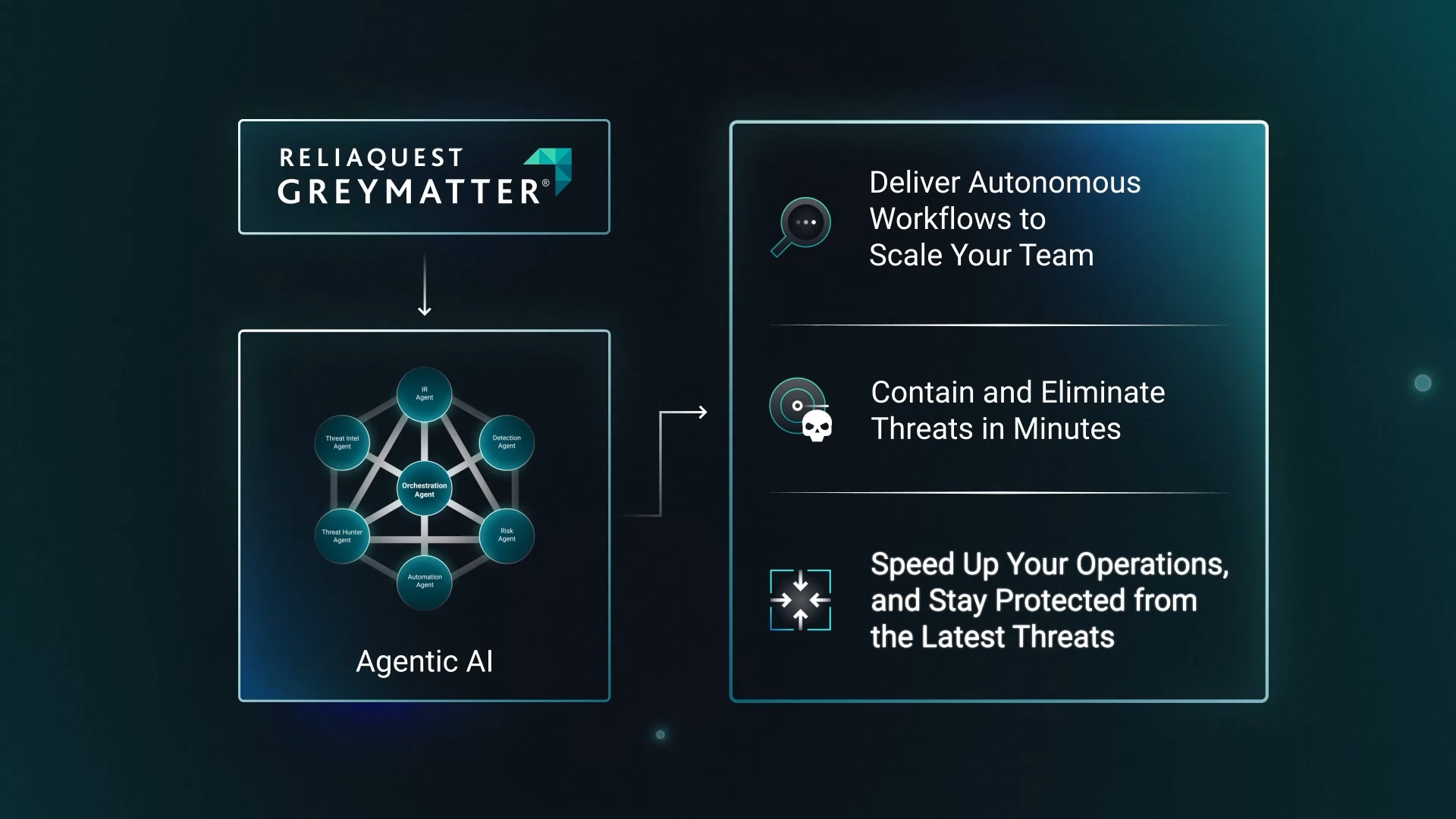

In response, security operations platforms are adopting various advanced forms of AI, including agentic AI. An agentic AI system can independently assess threats, take action, and continuously improve. Ultimately, in cybersecurity, AI isn’t just about efficiency; it’s about survival. AI is now a necessity for keeping pace with modern threats.

AI Technologies in Cybersecurity

However, it's important not to oversimplify AI in cybersecurity. AI is a broad ecosystem of technologies that security teams can use to improve detection, response, and visibility. Some of the most common include:

Machine Learning (ML): Learns from historical patterns to detect anomalies or recurring threats.

Deep Learning: Uses multi-layered neural networks to analyze complex behaviors across large datasets.

Large Language Models (LLMs): Help security teams quickly query systems or generate contextual explanations.

Many of these technologies are already partially embedded in current security stacks:

SIEM platforms increasingly use ML to cluster related alerts, enrich telemetry with threat intel, and reduce false positives.

EDR tools use ML to detect suspicious file executions, lateral movement patterns, or credential dumping.

Next-generation firewalls (NGFWs) use AI to analyze encrypted traffic, identify zero-day payloads, and apply dynamic threat prevention – even when predefined signatures aren’t available.

These technologies don’t operate in isolation; they work together to help security teams understand complex threats and respond automatically. In some security solutions, AI can now execute entire playbooks that once required manual effort. For example, when specific alerts arise, automated responses may include:

Blocking IPs

Resetting passwords

Blocking URLs

By automating these responses, security teams can reduce Mean Time to Contain (MTTC), limit attacker movement, and minimize disruption. Check out this video for a bigger-picture view of automating cybersecurity playbooks at each step: containment, investigation, and response.
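As a rough illustration of how alert-driven responses like the ones listed above could be wired together, the sketch below maps alert types to response actions. The alert types, action names, and the `plan_response` helper are hypothetical and do not represent any particular product's API; in practice each action would call a firewall, identity provider, or web proxy.

```python
from dataclasses import dataclass

# Hypothetical mapping from alert type to ordered response actions.
# The action names mirror the examples in the text; they are labels only.
PLAYBOOKS = {
    "malicious_ip": ["block_ip"],
    "credential_compromise": ["reset_password"],
    "phishing_link": ["block_url"],
}

@dataclass(frozen=True)
class Alert:
    alert_type: str
    target: str  # IP address, username, or URL, depending on the alert

def plan_response(alert: Alert) -> list[tuple[str, str]]:
    """Return (action, target) pairs; unknown alert types go to a human."""
    actions = PLAYBOOKS.get(alert.alert_type, ["escalate_to_analyst"])
    return [(action, alert.target) for action in actions]

plan = plan_response(Alert("malicious_ip", "203.0.113.7"))
```

Anything the mapping does not recognize falls through to an analyst, which keeps the automation conservative by default.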

The Benefits of Implementing AI in Cybersecurity

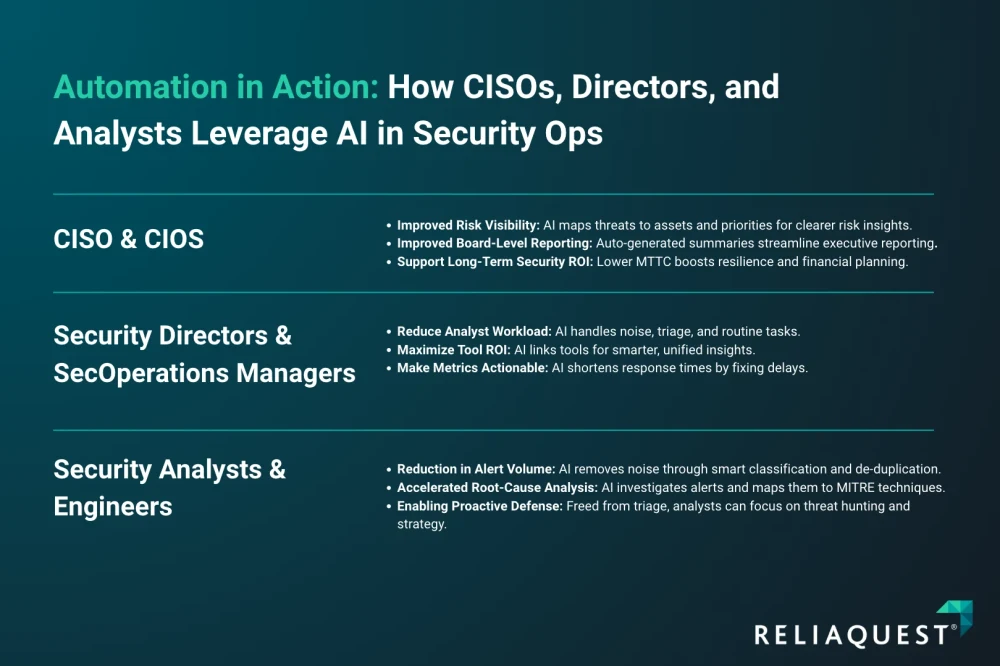

One thing is clear: as cybercriminals continue to tie AI into everyday attacks, security leadership needs to be just as quick to adopt AI into their everyday security measures. CISOs, CIOs, directors, analysts, and engineers alike can all benefit from using artificial intelligence to fulfill their respective duties.

Benefits of AI by Role

AI has become part of the day-to-day workflow for everyone involved in cybersecurity, from the CISO to the frontline analyst. Here’s how different personas benefit from operationalizing AI.

CISOs and CIOs: Driving Strategic Outcomes and Resilience

Improved risk visibility: Agentic AI provides high-fidelity metrics that help CISOs understand and communicate risk across business units – mapping threats to assets, identities, and business priorities.

Improved board-level reporting: AI enables faster, more accurate security reporting by auto-generating summaries of alert trends, incident types, and time-to-respond metrics.

Support long-term security ROI: By reducing MTTC, AI directly supports business resilience and financial planning.

Security Directors and SOC Managers: Efficient, Scalable Operations

Shrink the Tier 1/2 Workload: Agentic AI filters noise, triages alerts, and handles routine investigation and containment. That means managers can scale security operations without needing to scale headcount.

Optimize tool utilization: AI-driven platforms integrate with existing SIEM, EDR, and ticketing tools, correlating data across them and maximizing their combined value.

Operationalizing metrics like MTTC and Mean Time to Detect (MTTD): AI makes these timelines actionable by surfacing bottlenecks and gaps, and remediating many without human input.

Analysts and Engineers: Focus, Context, and Proactive Workflows

Reduction in alert volume: AI-driven classification and de-duplication save analysts from wading through countless redundant or useless alerts.

Accelerated root-cause analysis: AI agents proactively investigate alerts, collecting relevant logs, identifying affected assets, and mapping activity to MITRE techniques.

Enabling proactive defense: With time freed from repetitive triage, analysts can expend more effort on high-value tasks like threat hunting, red teaming, and automation tuning.

Security Benefits of AI in Cybersecurity

For everyone in the security team, AI’s real value lies in how it strengthens security posture – not just by saving time, but by enabling better decisions, faster containment, and continuous adaptation.

Minimizing Time to Detect and Respond

Attackers move fast, often faster than traditional tools or human analysts can keep up with. AI-driven defense compresses timelines across detection and response by:

Continuously analyzing telemetry for emerging attack patterns

Enriching alerts with relevant context from identity, endpoint, and threat intel sources

Automatically prioritizing and grouping related alerts to avoid duplicate work

This helps security teams respond faster and better, reducing MTTD and Mean Time to Respond (MTTR) with greater confidence and consistency.
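To make these metrics concrete, here is a small sketch of how detection and response times might be averaged from incident records. Definitions vary between teams; this example assumes detection time runs from the start of malicious activity to detection, and response time runs from detection to response. The incident records and field names are made up for illustration.

```python
from datetime import datetime
from statistics import mean

def mean_minutes(incidents, start_key, end_key):
    """Average gap in minutes between two timestamps across incidents."""
    return mean(
        (inc[end_key] - inc[start_key]).total_seconds() / 60 for inc in incidents
    )

# Made-up incident records for illustration only.
incidents = [
    {
        "started": datetime(2025, 1, 1, 9, 0),
        "detected": datetime(2025, 1, 1, 9, 10),
        "responded": datetime(2025, 1, 1, 9, 25),
    },
    {
        "started": datetime(2025, 1, 2, 14, 0),
        "detected": datetime(2025, 1, 2, 14, 20),
        "responded": datetime(2025, 1, 2, 14, 25),
    },
]

mttd = mean_minutes(incidents, "started", "detected")    # (10 + 20) / 2 minutes
mttr = mean_minutes(incidents, "detected", "responded")  # (15 + 5) / 2 minutes
```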

Correlating Data Across the Ecosystem

Security teams rely on a complex stack of SIEMs, EDR tools, firewalls, identity platforms, and ticketing systems. AI bridges these silos.

Instead of forcing analysts to pivot between tools manually, AI-driven SecOps platforms connect the dots, linking events across disparate sources into a cohesive, actionable picture.

This cross-platform correlation enhances visibility, reduces alert fatigue, and improves the signal-to-noise ratio across the board.
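One simple way to picture cross-tool correlation is grouping events by an entity they share, such as a hostname. The sketch below does only that; the event fields (`source`, `host`, `ts`, `detail`) are assumed for the example, and real platforms correlate on far richer signals.

```python
from collections import defaultdict

def correlate_by_entity(events, key="host"):
    """Group events from different tools by a shared entity, oldest first."""
    grouped = defaultdict(list)
    for event in events:
        grouped[event[key]].append(event)
    return {
        entity: sorted(items, key=lambda e: e["ts"])
        for entity, items in grouped.items()
    }

# Illustrative events from three different tools.
events = [
    {"source": "edr", "host": "ws-042", "ts": 3, "detail": "credential dumping tool executed"},
    {"source": "firewall", "host": "ws-042", "ts": 1, "detail": "outbound connection to rare domain"},
    {"source": "identity", "host": "srv-db1", "ts": 2, "detail": "login from new location"},
]

cases = correlate_by_entity(events)
```

Here the firewall and EDR events for `ws-042` land in a single, time-ordered case instead of two separate alerts in two consoles.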

Continuous Improvement

Unlike static automation, such as predefined playbooks, AI improves the more it’s used. Advanced SecOps platforms can now:

Refine detection models based on historical incidents

Learn from analyst input to fine-tune triage decisions

Adapt to infrastructure, user behavior, and application changes without reprogramming

The result? The system becomes more effective with every investigation, producing fewer false positives and surfacing more relevant risks over time.
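The idea of learning from analyst input can be sketched with a very simple feedback tracker. This is a deliberately naive stand-in for model retraining, not how any specific platform works: it records analyst verdicts per detection rule and scales future alert scores by how often the rule was confirmed. The class name, rule names, and scoring scheme are all assumptions.

```python
from collections import Counter

class FeedbackTracker:
    """Tracks analyst verdicts per detection rule."""

    def __init__(self):
        self.true_pos = Counter()
        self.false_pos = Counter()

    def record(self, rule: str, is_true_positive: bool) -> None:
        (self.true_pos if is_true_positive else self.false_pos)[rule] += 1

    def precision(self, rule: str) -> float:
        total = self.true_pos[rule] + self.false_pos[rule]
        # With no feedback yet, assume the rule is useful (an arbitrary default).
        return self.true_pos[rule] / total if total else 1.0

    def priority(self, rule: str, base_score: float) -> float:
        """Scale an alert's base score by how often analysts confirmed the rule."""
        return base_score * self.precision(rule)

tracker = FeedbackTracker()
tracker.record("rare_login", True)
for _ in range(3):
    tracker.record("rare_login", False)

adjusted = tracker.priority("rare_login", 80)  # 1 of 4 confirmed, so 80 * 0.25
```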

How is AI Used for Cybersecurity?

It’s important not to think of AI as a bolt-on to existing workflows. AI fundamentally redefines how detection, investigation, and response work across the security lifecycle. In traditional SecOps environments, teams rely heavily on predefined playbooks, rule-based alerts, and manual triage. However, these approaches cannot scale against modern threats.

Agentic AI transforms this model by introducing autonomous decision-making into every phase of the threat lifecycle. Let’s look at how that changes the Threat Detection, Investigation, and Response (TDIR) process.

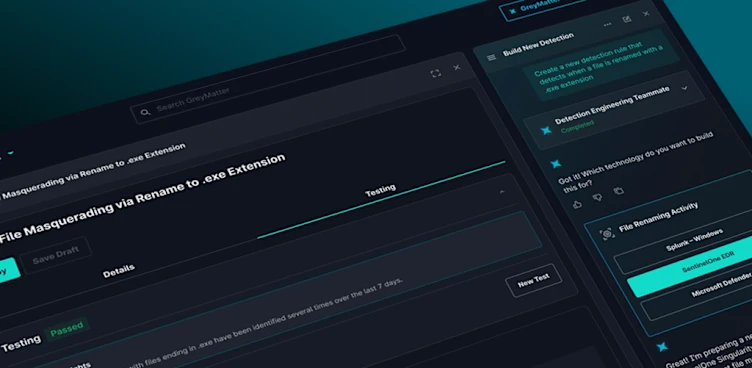

Enhanced Threat Detection: From Signatures to Signals

Traditional detection relies on matching known indicators or triggering static thresholds, both of which can miss novel or subtle threats. AI-based detection systems, however:

Analyze behavior, not just signatures

Correlate activity across users, endpoints, and cloud infrastructure

Identify low-and-slow attacks, such as credential misuse or lateral movement, that span days or weeks

What’s more, by continuously learning from your environment, AI tailors its detection logic to what’s normal and, crucially, flags what isn’t.
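A minimal way to illustrate "learning what's normal" is a statistical baseline: compare a new observation against the mean and standard deviation of past values. Production systems use much more sophisticated models; the function name, threshold of three standard deviations, and sample data below are assumptions chosen for the example.

```python
from statistics import mean, stdev

def is_anomalous(history, observed, threshold=3.0):
    """Flag a value more than `threshold` standard deviations from the baseline."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return observed != mu
    return abs(observed - mu) / sigma > threshold

# Illustrative daily login counts for one user over a week.
daily_logins = [4, 5, 6, 5, 4, 6, 5]

spike = is_anomalous(daily_logins, 40)   # far outside the usual 4-6 range
normal = is_anomalous(daily_logins, 6)   # within the usual range
```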

Investigation: From Manual Pivoting to Instant Context

Security teams spend massive amounts of time gathering context: pulling logs, tracing user activity, and checking threat intelligence. AI reduces that burden by:

Enriching alerts with identity, asset, and threat intel metadata

Grouping related alerts into a single, high-context case

Building timelines and surfacing MITRE techniques automatically

This reduces triage time, cuts guesswork, and helps analysts get to the root cause faster.
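Enrichment, the first item above, can be pictured as attaching lookup results to an alert before an analyst sees it. In this sketch the two dictionaries are hypothetical stand-ins for an asset inventory and an identity provider, and the field names are invented for illustration.

```python
# Hypothetical lookup tables standing in for an asset inventory and identity provider.
ASSETS = {
    "ws-042": {"owner": "jdoe", "criticality": "low"},
    "srv-db1": {"owner": "dba-team", "criticality": "high"},
}
IDENTITIES = {
    "jdoe": {"role": "contractor", "mfa_enabled": False},
}

def enrich(alert: dict) -> dict:
    """Return a copy of the alert with asset and identity context attached."""
    enriched = dict(alert)
    enriched["asset"] = ASSETS.get(alert.get("host"), {})
    enriched["identity"] = IDENTITIES.get(alert.get("user"), {})
    return enriched

case = enrich({"rule": "suspicious_login", "host": "ws-042", "user": "jdoe"})
```

Unknown hosts or users simply get empty context rather than raising an error, so a gap in the inventory does not block triage.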

Response: From Static Playbooks to Autonomous Action

Traditional automation requires predefined workflows – playbook steps that security teams must build, test, and update manually. AI changes this by:

Triggering action based on dynamic context, not just static conditions

Taking autonomous steps like isolating assets, resetting passwords, or disabling access

Adjusting containment decisions based on asset criticality, user role, or previous actions

These capabilities make AI particularly effective for Tier 1 and Tier 2 alerts, where speed matters most and context is limited.
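The difference between a static condition and context-aware action can be sketched as a small decision function. The policy below is purely illustrative: the field names, technique labels, and action names are assumptions, and a real agentic system would weigh far more context than three fields.

```python
def choose_containment(alert: dict) -> str:
    """Pick a containment step from alert context (illustrative policy only)."""
    critical = alert.get("asset_criticality") == "high"
    privileged = alert.get("user_role") == "admin"

    if alert.get("technique") == "lateral_movement":
        # Isolating a critical server can itself cause an outage, so ask a human.
        return "request_approval_to_isolate" if critical else "isolate_host"
    if alert.get("technique") == "suspicious_login":
        # Privileged accounts get the stronger response.
        return "disable_access" if privileged else "reset_password"
    return "escalate_to_analyst"
```

The same alert type leads to different actions depending on asset criticality and user role, which is the behavior a fixed playbook step cannot express on its own.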

Filtering Noise, Prioritizing What Matters

Alert fatigue is a huge problem, in part because many of the alerts security teams deal with are irrelevant or duplicated. AI tackles alert fatigue by:

Consolidating duplicate alerts and classifying threats with greater accuracy

Filtering out noise and elevating high-priority incidents for human attention

Learning from analyst responses to improve future prioritization

One ReliaQuest customer reported an 81% reduction in alert noise and triage burden after deploying agentic AI across their stack.
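Consolidating duplicates is the easiest of these steps to show in code. The sketch below fingerprints each alert on the fields that make it "the same" alert and keeps a count of repeats. Which fields belong in the fingerprint is an assumption here (`rule`, `host`, `user`); it says nothing about how the figure above was achieved.

```python
import hashlib

def fingerprint(alert: dict) -> str:
    """Hash the fields that define 'the same' alert; timestamps are ignored."""
    key = "|".join(str(alert.get(f, "")) for f in ("rule", "host", "user"))
    return hashlib.sha256(key.encode()).hexdigest()

def deduplicate(alerts):
    """Collapse duplicates into one record each, keeping a count of occurrences."""
    merged = {}
    for alert in alerts:
        fp = fingerprint(alert)
        if fp in merged:
            merged[fp]["count"] += 1
        else:
            merged[fp] = {**alert, "count": 1}
    return list(merged.values())

# Illustrative alerts: the first two differ only by timestamp.
alerts = [
    {"rule": "brute_force", "host": "vpn-01", "user": "jdoe", "ts": 1},
    {"rule": "brute_force", "host": "vpn-01", "user": "jdoe", "ts": 2},
    {"rule": "malware", "host": "ws-042", "user": "asmith", "ts": 3},
]

unique = deduplicate(alerts)
```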

How are Threat Actors Using AI?

However, as noted, AI isn’t just transforming defensive operations; it’s transforming the attacker’s playbook too. Today’s adversaries are adopting AI to scale attacks, evade detection, and accelerate their timelines. As a result, threats are faster, more convincing, and harder to contain.

Here’s how attackers are leveraging AI in the wild:

Automated Reconnaissance and Social Engineering

AI enables cybercriminals to automate OSINT (open-source intelligence) gathering. AI-driven tools can scrape the web for employee data, job titles, email patterns, and even social media activity to build detailed profiles. These insights fuel highly targeted spear-phishing and social media campaigns that mimic real interactions with uncanny accuracy.

In some cases, attackers are even using generative AI to craft context-aware emails and impersonate internal tone and language. This makes traditional spam filters and user training less effective at catching these scam attempts.

Phishing Kits Powered by Generative AI

AI-powered phishing is one of the most dangerous developments in cybercrime. Researchers have observed attackers using tools like Gamma to generate realistic, brand-faithful login pages – for instance, for Microsoft SharePoint – that are difficult for even trained users to distinguish from legitimate ones.

Unlike older phishing kits that relied on static templates, generative models can adapt in real time, creating custom pages for specific targets, including company logos, employee names, or unique pretexts. This level of personalization increases the success rate of credential harvesting.

AI-Enhanced Malware and Code Generation

AI is also making its way into the malware development lifecycle. LLMs can help attackers write obfuscated code, debug scripts, and generate polymorphic payloads that evade traditional detection tools. Some underground communities are even sharing jailbreak prompts and AI configurations specifically tuned for malicious code generation.

Combined with AI-driven reconnaissance, this creates a feedback loop where attackers can generate, deploy, and refine malware in real time.

Faster Intrusion Timelines

Using AI, attackers can breach organizations faster than ever – way faster than human analysts alone can respond. And, once inside the network, they can rapidly identify the path of least resistance by mapping out privileged accounts, vulnerable assets, and misconfigurations, optimizing their route to sensitive data.

AI in Ransomware Operations

Ransomware groups are also beginning to incorporate AI into their toolchains. AI can assist them in:

Target selection: Prioritizing victims based on potential payout or infrastructure weaknesses.

Evasion: Mutating ransomware samples to avoid signature-based tools.

Negotiation: Generating automated messages and responses to victims using generative AI chatbots.

Deepfakes and Voice Cloning for Impersonation Attacks

AI-powered voice and video cloning are enabling a new wave of high-impact impersonation attacks. In one documented case, attackers used voice cloning to impersonate a company executive during a call with finance staff, successfully requesting a fraudulent wire transfer.

This kind of synthetic media threat is no longer theoretical. As models become cheaper and easier to use, deepfake impersonation is becoming a realistic risk for high-profile and high-access individuals.

The bad news is: this is only the beginning. For more, check out our eBook: 3 Ways Cybercriminals Are Leveling Up with AI.

What are the Risks of AI in Cybersecurity?

AI is a powerful tool, but like any emerging technology, it brings significant risks. We can divide these risks into two categories: those introduced by external threat actors and those that arise internally. Understanding both sides is critical to implementing AI responsibly and securely.

Insider Risks

Even when used internally, AI introduces risks, especially if deployed without the right oversight or safeguards. These include:

Lack of explainability: AI-driven systems that operate as black boxes can erode trust. If analysts can’t see why a step was taken – or validate it – they can’t improve it.

Decision drift: Without continuous tuning or feedback, AI models can degrade over time, misclassifying threats or ignoring new behaviors. Static generative models are particularly vulnerable here.

Hallucinations: Especially in LLM-based workflows, hallucinations can lead to false positives, mislabeling incidents, or even recommending/executing incorrect responses.

Overreliance on automation: Without human-in-the-loop guardrails, teams risk letting AI make decisions in sensitive contexts where nuance is critical, such as insider threats or hybrid infrastructure.

That said, as we outline in our AI agent whitepaper, agentic AI mitigates these issues through contextual learning and integrated oversight – ensuring AI learns from your environment and stays aligned with business risk.

External Risks

AI doesn’t just offer attackers strategic advantages; it also creates new targets. As security platforms adopt AI-driven workflows and decision-making, adversaries are finding ways to manipulate, disrupt, or exploit those systems directly. Key external risks include:

Prompt injection and manipulation: Threat actors craft inputs that trick LLMs into revealing sensitive data, bypassing restrictions, or making poor recommendations. These attacks can undermine AI-enabled investigation or triage.

AI poisoning attacks: By injecting malicious or misleading data into logs or telemetry, attackers can influence the training or reinforcement learning of defensive models, causing them to overlook real threats or misclassify benign behavior.

Exploitation of autonomous systems: As security platforms adopt agentic AI tools that take autonomous action, attackers may attempt to manipulate the logic itself, triggering containment workflows unnecessarily, diverting investigations, or forcing false positives to exhaust resources.

These risks underscore the need for transparent, governed AI. It’s crucial to build oversight and human validation into AI tools – particularly agentic AI models – ensuring that security teams can audit, refine, and align autonomous rules to risk.

Mitigating the Risks of AI in Cybersecurity

So, how can organizations mitigate these risks?

Reducing hallucinations with curated data and scope control

To minimize inaccurate or misleading outputs from LLMs, advanced platforms constrain AI access to curated, security-specific data such as normalized alerts, asset inventories, and known threat behaviors.

Enabling transparency through observability and auditability

One of the most significant risks with AI is the “black box” effect, when analysts don’t understand why a model made a certain decision. That’s why leading SecOps platforms now provide visibility into how AI models weigh input factors, which rules were triggered, and what alternatives were considered. This traceability ensures analysts can review, validate, and refine outputs over time.

Keeping data privacy intact through segmentation and relevance filtering

AI doesn’t need to know everything. By limiting input to only the relevant, security-approved data sources, platforms reduce exposure risk and maintain compliance with privacy mandates. The best platforms are designed to segment data by environment, tenant, and identity, ensuring AI insights are context-specific and privacy-conscious.
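As a simple sketch of segmentation and relevance filtering, the function below keeps only one tenant's records and strips each record down to an allowlist of fields before anything is passed to a model. The allowlist contents, field names, and `build_model_input` helper are hypothetical.

```python
# Hypothetical allowlist of fields considered safe and relevant for the model.
ALLOWED_FIELDS = {"rule", "severity", "host", "technique"}

def build_model_input(records, tenant_id):
    """Keep only this tenant's records, stripped down to allowlisted fields."""
    return [
        {k: v for k, v in rec.items() if k in ALLOWED_FIELDS}
        for rec in records
        if rec.get("tenant") == tenant_id
    ]

# Illustrative records from two tenants, one containing personal data.
records = [
    {"tenant": "acme", "rule": "brute_force", "severity": "high", "employee_email": "jdoe@example.com"},
    {"tenant": "globex", "rule": "malware", "severity": "low", "host": "ws-1"},
]

model_input = build_model_input(records, "acme")
```

Filtering by allowlist rather than blocklist means a newly added sensitive field stays out of the model's input until someone deliberately approves it.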

Building in human oversight and refinement loops

Even with autonomous capabilities, AI shouldn’t operate without human guardrails. Security teams can deploy agentic AI models in the most advanced platforms with the option for human-in-the-loop validations, especially when containment or escalation decisions are involved. As analysts accept, reject, or edit AI recommendations, the system learns and adjusts accordingly.
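A human-in-the-loop guardrail can be sketched as an approval gate in front of high-impact actions. In this example nothing is actually executed; the function only returns a status record. The action names and the `approve` callback are placeholders for a real approval workflow, such as a prompt in a ticketing or chat tool.

```python
HIGH_IMPACT = {"isolate_host", "disable_access"}  # hypothetical action names

def execute_with_guardrail(action, target, approve):
    """Run low-impact actions directly; ask a human before high-impact ones.

    `approve` is any callable that takes (action, target) and returns
    True or False. It stands in for a real approval step.
    """
    if action in HIGH_IMPACT and not approve(action, target):
        return {"action": action, "target": target, "status": "rejected"}
    return {"action": action, "target": target, "status": "executed"}

# An analyst who rejects everything, for demonstration purposes.
def deny_all(action, target):
    return False

blocked = execute_with_guardrail("isolate_host", "srv-db1", deny_all)
allowed = execute_with_guardrail("block_url", "http://example.test", deny_all)
```

Recording the analyst's accept or reject decision alongside each action is also what gives the system something to learn from.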

The Future of AI in Cybersecurity

Artificial Intelligence in cybersecurity is no longer experimental; it’s foundational. But while many organizations are still exploring ML and basic automation, the most forward-thinking SecOps teams are already planning for what comes next: agentic AI, hyperautomation, and multi-agent collaboration.

Agentic AI Security

Most current security tools use AI to assist analysts: enriching alerts, flagging anomalies, or performing basic automation. Agentic AI takes this further. It doesn’t just assist; it acts.

Agentic AI systems are designed to:

Make decisions without human input

Take direct action to mitigate threats

Continuously learn from analyst feedback, infrastructure changes, and new threat behaviors

Whereas traditional AI requires predefined playbooks, agentic AI can dynamically assess risk and act based on real-time context. It doesn’t just follow rules; it adapts to your environment. This means:

Automatically isolating endpoints if lateral movement is detected

Resetting credentials if unusual login behavior is observed

Flagging suspicious behavior before damage is done, even when it doesn’t match known signatures

Hyperautomation

The goal of hyperautomation isn’t to replace humans; it’s to free them to do higher-value work. By automating every step of the threat lifecycle, from detection to containment, security teams can operate at scale without burning out analysts or overloading infrastructure.

Hyperautomation in modern SOCs includes:

Ingesting and correlating telemetry from multiple sources, including SIEM, EDR, firewalls, and identity tools

Triaging, classifying, and grouping related alerts

Executing complete response workflows without manual input

Surfacing only the alerts that require human judgment

When powered by agentic AI, hyperautomation becomes more than just orchestration. It evolves into decision-driven execution, where the platform doesn’t just move data, it understands and acts upon it.

According to Gartner, hyperautomation “is a business-driven, disciplined approach that organizations use to rapidly identify, vet and automate as many business and IT processes as possible.” Or, as stated by SAP, “Hyperautomation refers to the use of smart technologies to identify and automate as many processes as possible – as quickly as possible.”

For example, advanced AI and ML algorithms can be combined with business process automation (BPA), robotic process automation (RPA), and more to bring intelligence to workflows where only automation was available before.

Multi-Agent Systems

Multi-agent systems are one of the most exciting developments in cybersecurity. These models involve multiple AI agents specializing in specific tasks and collaborating under a central orchestrator.

For example:

One agent may specialize in endpoint behavior analysis

Another may focus on cloud misconfigurations

A third may monitor identity signals across federated environments

These agents share data and observations with a central orchestrator agent, which synthesizes their input and makes holistic decisions. This layered structure ensures specialization without siloing, much like a high-performing SOC.
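The orchestrator pattern described here can be sketched in a few lines. Each "agent" below is just a function returning a risk score between 0 and 1, and the orchestrator combines them into one decision. The agent logic, event fields, scores, and threshold are all invented for illustration and are not drawn from any real multi-agent framework.

```python
def endpoint_agent(event):
    """Scores endpoint behavior (toy rule: a known credential-dumping tool)."""
    return 0.9 if event.get("process") == "mimikatz.exe" else 0.1

def cloud_agent(event):
    """Scores cloud misconfigurations (toy rule: publicly exposed storage)."""
    return 0.7 if event.get("bucket_public") else 0.0

def identity_agent(event):
    """Scores identity signals (toy rule: impossible-travel login)."""
    return 0.8 if event.get("impossible_travel") else 0.0

AGENTS = {"endpoint": endpoint_agent, "cloud": cloud_agent, "identity": identity_agent}

def orchestrate(event, threshold=0.75):
    """Collect each agent's risk score and make one combined decision."""
    scores = {name: agent(event) for name, agent in AGENTS.items()}
    top_agent = max(scores, key=scores.get)
    decision = "contain" if scores[top_agent] >= threshold else "monitor"
    return {"scores": scores, "raised_by": top_agent, "decision": decision}

result = orchestrate({"process": "mimikatz.exe"})
```

Taking the highest single score is the simplest possible synthesis; a real orchestrator would likely weigh agreement between agents as well.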

ReliaQuest is already laying the foundation for this future. As detailed in our eBook From Assistants to Agents, multi-agent frameworks enable:

Modular deployments

Parallel processing of complex threat scenarios

AI-driven collaboration that mirrors analyst workflows, but at machine speed

AI-Powered Security Operations with ReliaQuest GreyMatter

ReliaQuest has harnessed decades of security operations data to train generative AI and agentic AI models within its GreyMatter platform, making it uniquely suited for customers looking to augment their security operations teams. Pairing these AI capabilities with automation speeds threat detection, containment, investigation, and response even further, resulting in mean times to contain (MTTC) of 5 minutes or less for our customers.