What is an AI Agent?

An AI agent is a software system designed to autonomously complete a task or set of tasks within a defined scope. In the context of security operations, this could mean enriching alerts with asset data, correlating signals across tools, or generating analyst-ready summaries – all without needing to be prompted by a human.

Each AI agent has:

A defined role or objective: Like summarizing, enriching, or correlating

Access to relevant data sources: Such as telemetry, threat intel, or historical alerts

A decision-making capability: Based on rules, heuristics, or embedded models

The ability to act within the SOC ecosystem
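
As a rough illustration only, the four elements above could be sketched in Python as follows. The class name `EnrichmentAgent`, the alert fields, and the in-memory asset inventory are hypothetical and not drawn from any specific product.

```python
from dataclasses import dataclass, field


@dataclass
class EnrichmentAgent:
    """Hypothetical agent: the names and fields are illustrative only."""

    # A defined role or objective
    role: str = "enrich alerts with asset data"
    # Access to relevant data sources (here, an in-memory asset inventory)
    assets: dict = field(default_factory=dict)

    def decide(self, alert: dict) -> bool:
        """Decision-making: a simple rule stands in for heuristics or a model."""
        return alert.get("host") in self.assets

    def act(self, alert: dict) -> dict:
        """Action: return an enriched copy of the alert, leaving the input untouched."""
        if not self.decide(alert):
            return {**alert, "enriched": False}
        return {**alert, "asset": self.assets[alert["host"]], "enriched": True}


agent = EnrichmentAgent(assets={"db-01": {"owner": "finance", "criticality": "high"}})
enriched = agent.act({"id": "A-1", "host": "db-01"})
unknown = agent.act({"id": "A-2", "host": "laptop-7"})
```

In a real SOC the `act` step would write back to a case management or ticketing tool rather than return a dictionary.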

Agentic AI vs Generative AI

AI agents differ from generative AI models like ChatGPT. Large language models (LLMs) are general-purpose tools that respond to inputs; they don’t initiate actions, track state, or work towards an outcome.

An AI agent may use a generative AI model (for example, to help explain a complex decision), but the agent determines:

When to generate the summary

What context to include based on telemetry and prior alerts

Where to route the output

In other words, the generative AI is the component, and the agent is the actor.
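
One way to picture this split is the sketch below, where the model is passed in as a plain function and the agent owns the when, what, and where. Everything here is an assumption for illustration: `fake_llm` stands in for a real model call, and the severity cutoffs and queue names are invented.

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Stand-in for a generative model call; a real deployment would call a model here."""
    return f"SUMMARY: {prompt}"


def handle_alert(alert: dict, prior_alerts: list, generate: Callable[[str], str]):
    """Hypothetical agent step: the agent decides when, what, and where."""
    # When: only summarize alerts at or above an assumed severity cutoff.
    if alert["severity"] < 7:
        return None
    # What: include related prior alerts for the same host as context.
    related = [p["id"] for p in prior_alerts if p["host"] == alert["host"]]
    prompt = f"alert {alert['id']} on {alert['host']}; related: {related}"
    # Where: route critical summaries to an assumed on-call queue.
    queue = "on-call" if alert["severity"] >= 9 else "triage"
    return {"queue": queue, "summary": generate(prompt)}


history = [{"id": "A-1", "host": "db-01"}, {"id": "A-2", "host": "web-03"}]
result = handle_alert({"id": "A-9", "host": "db-01", "severity": 9}, history, fake_llm)
skipped = handle_alert({"id": "A-10", "host": "web-03", "severity": 3}, history, fake_llm)
```

Because the model is only a parameter, it can be swapped without changing the agent's decision logic.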

What's the Difference Between Agentic AI and AI Agents?

An AI agent is like a specialized worker, while agentic AI is the system that manages and coordinates the entire team.

Where AI agents act independently to complete specific tasks, agentic AI is the architecture that coordinates many of these agents towards a shared outcome, such as reducing response times, improving detection fidelity, or validating incidents at scale.

In an agentic AI system:

Each agent has a defined task

Agents share context and hand off tasks, data, and analysis to one another dynamically

A central orchestrator agent manages task assignment and prioritization, and adapts the workflow in real time based on new data
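
A minimal sketch of that coordination pattern, assuming a priority queue and two toy agents, might look like this. The `Orchestrator` class, the task names, and the rule that critical alerts get enrichment next are all hypothetical.

```python
import heapq


def triage(alert):
    return {**alert, "triaged": True}


def enrich(alert):
    return {**alert, "owner": "finance"}


class Orchestrator:
    """Hypothetical orchestrator: assigns queued work to registered agents by priority."""

    def __init__(self, agents):
        self.agents = agents  # task name -> agent callable
        self.queue = []       # min-heap of (priority, sequence, task, alert)
        self.seq = 0          # tie-breaker so alert dicts are never compared

    def submit(self, priority, task, alert):
        heapq.heappush(self.queue, (priority, self.seq, task, alert))
        self.seq += 1

    def run(self):
        handled = []
        while self.queue:
            _, _, task, alert = heapq.heappop(self.queue)
            result = self.agents[task](alert)
            # Adapt the workflow: a triaged alert on a critical host gets enrichment next.
            if task == "triage" and result.get("critical"):
                self.submit(0, "enrich", result)
            handled.append((task, result["id"]))
        return handled


orc = Orchestrator({"triage": triage, "enrich": enrich})
orc.submit(5, "triage", {"id": "A-1", "critical": False})
orc.submit(1, "triage", {"id": "A-2", "critical": True})
order = orc.run()
```

Lower numbers run first here, so the critical alert is triaged and enriched before the routine one is touched.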

Agentic AI systems are designed to learn and adapt, unlike traditional automation or static workflows. They can:

Route alerts to the most relevant agents based on context

Escalate incidents based on dynamic thresholds

Optimize based on what’s working and what isn’t
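
To make "dynamic thresholds" concrete, here is one possible reading: the escalation cutoff moves with recent alert scores instead of being fixed. The rule used (mean plus `k` standard deviations) and the sample scores are assumptions chosen for illustration, not a description of any particular product.

```python
from statistics import mean, pstdev


def dynamic_threshold(recent_scores, k=2.0):
    """Assumed rule: the cutoff sits k standard deviations above the recent mean."""
    return mean(recent_scores) + k * pstdev(recent_scores)


def should_escalate(score, recent_scores):
    return score > dynamic_threshold(recent_scores)


quiet_week = [10, 12, 11, 9, 13]
noisy_week = [40, 60, 50, 45, 55]

escalate_when_quiet = should_escalate(30, quiet_week)
escalate_when_noisy = should_escalate(30, noisy_week)
```

The same score of 30 stands out against the quiet baseline but not against the noisy one, which is the behavior a static threshold cannot express.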

In mature environments, these systems are trained using an organization’s own telemetry and historical alerts, meaning every action is grounded in real-world conditions, not just static rules or vendor presets.

The key distinction is that AI agents carry out tasks, but agentic AI builds a system where those agents work together to deliver outcomes. This architecture allows security teams to move from task-level automation to outcome-driven execution – a clear example of how AI is changing cybersecurity from rule-based scripts to adaptive systems that learn and improve.

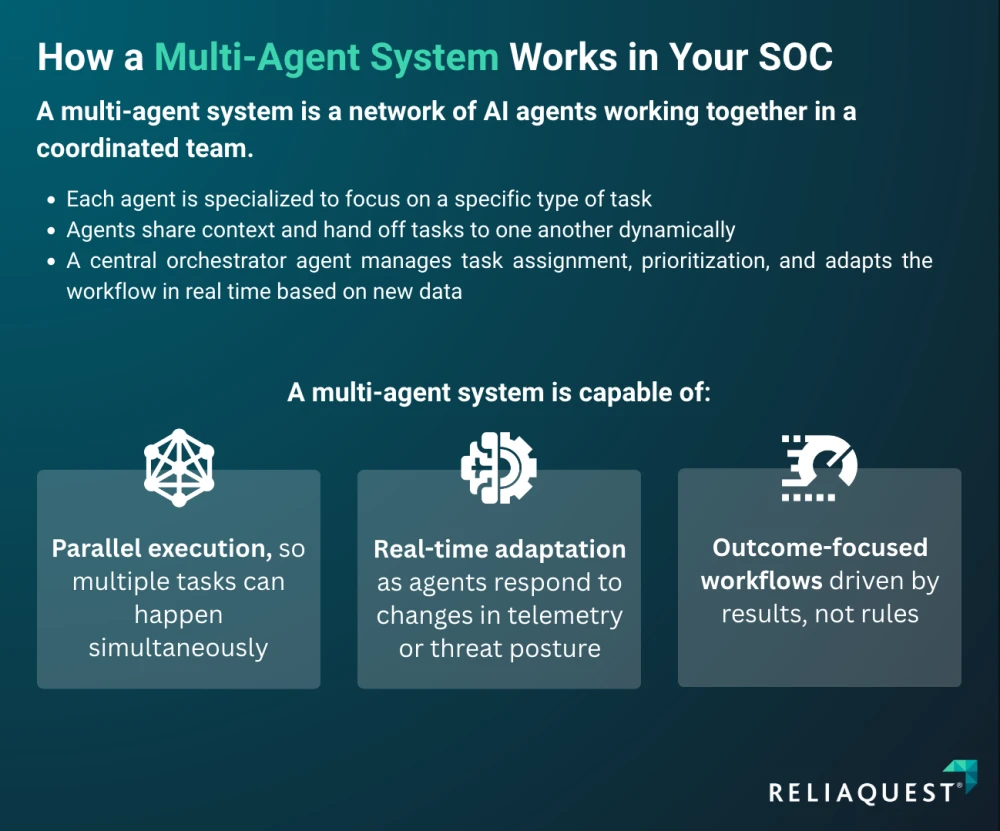

Multi-Agent Systems

A multi-agent system, sometimes called a multi-agent AI system, is a network of AI agents working together in a coordinated team.

Unlike traditional automation, which relies on rigid, sequential workflows, multi-agent systems are dynamic and adaptive. Each agent is specialized, but they interact continuously, sharing context and adjusting to new information in real time.

What sets multi-agent systems apart isn’t just the number of agents; it’s the structure that connects them. A supervisory AI called an orchestration agent sits at the center, assigning tasks, monitoring progress, and reallocating resources as priorities shift.

This architecture enables:

Parallel execution, so multiple tasks can happen simultaneously

Real-time adaptation, as agents respond to changes in telemetry or threat posture

Outcome-focused agentic AI workflows driven by results, not rules
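
The parallel execution point can be illustrated with Python's `asyncio`, which runs I/O-bound tasks concurrently. This is a sketch under stated assumptions: the agent names are invented, and the short sleeps stand in for real work such as API lookups.

```python
import asyncio


async def run_agent(name: str, alert_id: str, delay: float) -> str:
    """Hypothetical agent task; the sleep stands in for I/O such as an API lookup."""
    await asyncio.sleep(delay)
    return f"{name}:{alert_id}"


async def fan_out(alert_id: str) -> list:
    # gather runs the coroutines concurrently and returns results in argument order.
    return await asyncio.gather(
        run_agent("enrich", alert_id, 0.02),
        run_agent("correlate", alert_id, 0.01),
        run_agent("summarize", alert_id, 0.0),
    )


results = asyncio.run(fan_out("A-1"))
```

The three tasks overlap in time, so the total wait is close to the slowest task rather than the sum of all three.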

This design is especially valuable in security operations. Threats evolve quickly, telemetry volumes are high, and context is often fragmented. A multi-agent system can continuously monitor and take action in an environment faster than a human can, without manual input. It can triage alerts, correlate data, and escalate incidents quickly and precisely while keeping humans in the loop and in control with transparent explanations of each step it takes.

What are AI SOC Agents?

AI SOC agents are specialized AI agents trained and deployed to operate inside the security operations center (SOC). Each one is assigned a specific, security-focused task – like alert triage, signal correlation, or contextual enrichment – and acts independently to complete it.

What makes them different from traditional security automation is their ability to:

Ingest telemetry and historical data

Reason across multiple inputs, such as threat intel, MITRE mappings, and asset criticality

Take proactive action, such as escalating a priority incident or suppressing a false positive

They are not static scripts or prebuilt playbooks. They’re domain-trained agents capable of adapting to a SOC’s evolving environment, using feedback loops to refine how they act over time.

In more advanced implementations, these agents are:

Tuned on an organization’s own alert history and analyst workflows

Aligned to how that SOC prioritizes risk, context, and response

Connected to other agents through an AI orchestrator that decides which agent acts and when

This design shifts SOCs from alert-by-alert response to autonomous, coordinated execution. Agents not only handle specific tasks, but collaborate to drive outcomes like faster triage, better incident validation, and reduced analyst workload.

AI SOC Analyst

The role of the SOC analyst is evolving. As threat volumes grow and telemetry becomes more complex, analysts are under pressure to move faster – without sacrificing context or accuracy.

AI SOC agents help shift the analyst’s role from manual triage and investigation to high-value, judgement-driven work. Analysts receive:

Pre-triaged incidents, already correlated and scored for severity

Enriched context, drawn from internal telemetry, threat intel and asset data

AI-generated summaries, written in plain language, with citations and linked evidence

This reduces noise, limits alert fatigue, and shortens the time between detection and response. It also unlocks time for analysts to do what matters most: threat modelling, hypothesis testing, tuning detections, and improving security posture.

Importantly, the analyst is never removed from the loop. AI SOC agents surface the “what” and “why,” but the analyst retains decision-making control – choosing how to respond, when to escalate, and what deserves deeper investigation.

The result is a more efficient, empowered SOC – where human expertise is amplified, not replaced.

What are the Benefits of Agents in Security Workflows?

AI SOC agents don’t just help security teams move faster – they help them move smarter.

By embedding intelligent agents into security workflows, organizations gain the ability to scale decision-making, reduce response times, and free up human analysts to focus on high-priority work.

Key benefits include:

Speed and consistency: Agents can ingest, correlate and prioritize alerts in real time – without fatigue, context switching, or delays. This dramatically shortens mean time to detect (MTTD) and respond (MTTR).

Proactive action: Unlike traditional automation, agents don’t wait for a human prompt. If an agent detects a threat that exceeds a certain threshold, it can escalate, suppress, or hand off to another agent immediately.

Workload reduction: By offloading noisy or repetitive tasks like initial triage, enrichment, and false positive filtering, agents reduce cognitive overload for analysts and limit alert fatigue.

Improved accuracy: Agents don’t follow static playbooks. They adapt based on context, pass tasks between one another, and adjust their approach in real time, all guided by a central orchestrator.

Explainability and trust: Modern SOC agents provide decision trails. Analysts can see what data was used, how a conclusion was reached, and what action the agent took, making outcomes transparent and auditable.

In short, AI agents let defenders act with greater speed, precision, and confidence, without sacrificing control or context.

Implementing AI SOC Agents in Security Workflows

Deploying AI agents is a layered enhancement of existing workflows, not a rip-and-replace process. The goal is to embed intelligence where it’s most needed.

Here’s what the process should look like:

1. Real-Time Threat Detection

AI agents continuously monitor telemetry from across the environment, including endpoints, cloud tools, the network, identities, and more. They’re trained to recognize meaningful deviations, not just match signatures. This enables:

Early detection of stealthy behavior across fragmented data

Prioritizing based on asset sensitivity, user behavior, and MITRE TTPs

Continuous tuning based on historical patterns and analyst feedback
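
Prioritization of this kind is often expressed as a weighted score. The sketch below assumes each factor has already been normalized to a 0-10 scale; the factor names and weights are invented for illustration and would need tuning against real analyst feedback.

```python
# Assumed weights; in practice these would be tuned from history and feedback.
WEIGHTS = {"asset_sensitivity": 0.5, "user_risk": 0.3, "ttp_severity": 0.2}


def priority(alert: dict) -> float:
    """Weighted sum of factors, each expected on a 0-10 scale."""
    return sum(WEIGHTS[name] * alert[name] for name in WEIGHTS)


alerts = [
    {"id": "A-2", "asset_sensitivity": 3, "user_risk": 8, "ttp_severity": 4},
    {"id": "A-1", "asset_sensitivity": 9, "user_risk": 2, "ttp_severity": 6},
]
ranked = sorted(alerts, key=priority, reverse=True)
```

With these weights, the alert on the more sensitive asset ranks first even though the other alert involves a riskier user.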

2. Incident Response Automation

Once a potential threat is identified, autonomous AI agents handle the early response:

Correlating the alert with related signals across tools

Enriching with threat intel and known vulnerabilities

Determining whether to suppress, escalate, or assign to another agent

This eliminates swivel-chair investigations and allows the SOC to act in seconds, not hours.

3. Scalable Contextualization

AI agents can enrich each alert with data pulled from dozens of sources:

Records from configuration management databases (CMDBs), identity and access management (IAM) platforms, endpoint detection and response (EDR) tools, ticketing systems, and more

Context about user identity, asset value, exposure risk, and threat history

Tailored narrative that explains “why this matters” in analyst-ready language

This ensures consistent, high-quality context even in high-volume environments.

4. Cross-System Correlation Without Manual Pivoting

Instead of analysts needing to pivot between consoles, agents act as a link:

Mapping alerts across tools using shared context

Inferring relationships between low-fidelity signals

Aligning correlated incidents to threat models like MITRE ATT&CK

The result is fewer duplicate tickets, clearer escalation paths, and more confident responses.
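
A very simple form of this correlation is grouping alerts from different tools by a shared entity. The sketch below assumes the host name is the shared key and that each alert already carries a MITRE ATT&CK technique ID; the alert structure is hypothetical.

```python
from collections import defaultdict


def correlate(alerts):
    """Group alerts from different tools that share a host (an assumed correlation key)."""
    incidents = defaultdict(list)
    for alert in alerts:
        incidents[alert["host"]].append(alert)
    # Keep only groups seen by more than one tool; single-source signals stay as alerts.
    return {
        host: sorted({a["technique"] for a in group})
        for host, group in incidents.items()
        if len({a["tool"] for a in group}) > 1
    }


alerts = [
    {"tool": "edr", "host": "db-01", "technique": "T1059"},
    {"tool": "iam", "host": "db-01", "technique": "T1078"},
    {"tool": "edr", "host": "web-03", "technique": "T1046"},
]
incidents = correlate(alerts)
```

Two low-fidelity signals on `db-01` become one incident with two mapped techniques, which is what removes the duplicate ticket.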

5. Reduced Manual Error and Analyst Burnout

AI agents follow every step of the playbook. They don’t forget to check asset ownership, look up IOC matches, or attach enrichment details. This consistency reduces exposure from missed signals and limits fatigue from repetitive tasks, giving analysts the bandwidth to focus on higher-value tasks.

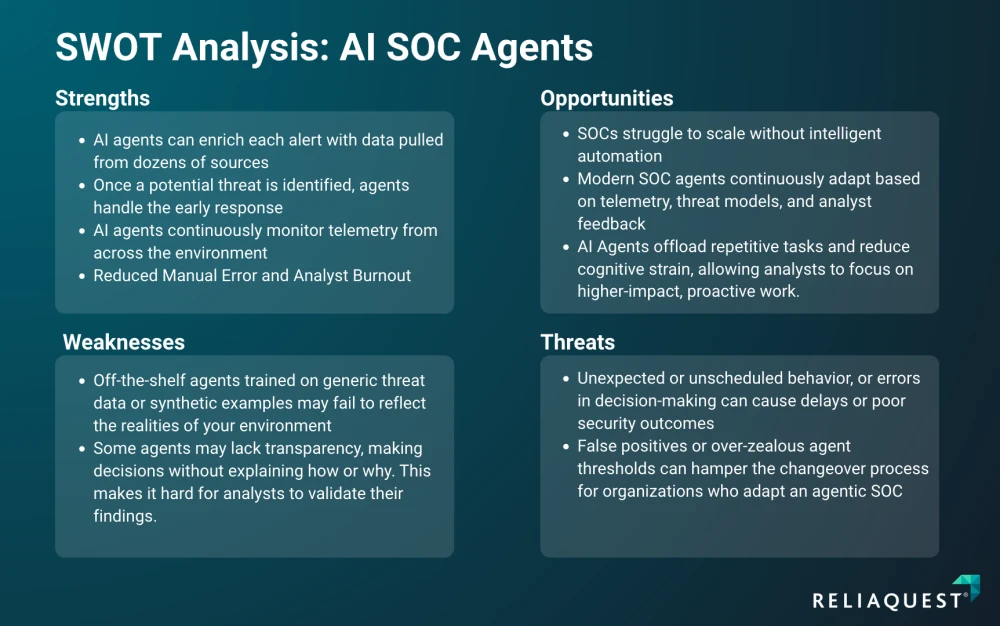

What are the Risks of AI Agents?

Autonomous AI agents are powerful, but they’re not infallible. Like any system that acts independently, they introduce new risks if not implemented with oversight and care. AI committees should consider the following risks when developing policies:

Overreliance on Generic Models

Off-the-shelf agents trained on generic threat data or synthetic examples may fail to reflect the realities of your environment. This misalignment leads to false positives, missed threats, or poor prioritization, especially when signals are nuanced or context-specific.

Limited Transparency

If agents make decisions without explaining how or why, it’s hard for analysts to validate their findings. This erodes trust and makes it difficult to justify actions during audits or post-incident reviews. Transparency must be built in from the start, showing what data was used, how a conclusion was reached, and what triggered escalation.
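
One way to build that transparency in is to have every agent action emit a structured decision record. The sketch below is an assumption about what such a record could contain; the field names and example values are illustrative, not a standard schema.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class DecisionRecord:
    """Hypothetical audit entry; field names are illustrative."""

    alert_id: str
    data_used: list  # what data was used
    reasoning: str   # how the conclusion was reached
    trigger: str     # what triggered escalation
    action: str


record = DecisionRecord(
    alert_id="A-1",
    data_used=["edr:process_tree", "cmdb:asset_owner"],
    reasoning="Unsigned binary spawned a shell on a high-criticality host",
    trigger="priority score above escalation threshold",
    action="escalate",
)
# One JSON object per line is easy to append to a log and to query later.
line = json.dumps(asdict(record), sort_keys=True)
```

Writing the record at decision time, rather than reconstructing it afterwards, is what makes it usable in an audit or post-incident review.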

Faulty Feedback Loops

Agents that learn from analyst feedback can evolve for better or worse. If the data being reinforced is biased, incomplete, or inconsistent, the agent may drift from desired behavior over time.

Unchecked Automation

AI agents act without being prompted. If improperly scoped or loosely configured, they can take action that’s out of step with current priorities, escalating low-priority incidents, suppressing relevant alerts, or missing subtle lateral movement.
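
Scoping can be enforced in code as well as in policy. The following sketch assumes a simple allowlist plus a human-approval requirement for suppression; the action names and the approval rule are hypothetical examples of a guardrail, not a recommended policy.

```python
from typing import Optional

ALLOWED_ACTIONS = {"escalate", "suppress", "handoff"}  # assumed scope for this agent
NEEDS_APPROVAL = {"suppress"}  # assumed policy: a human confirms any suppression


def authorize(action: str, approved_by: Optional[str] = None) -> str:
    """Return "run" or "pending"; reject anything outside the agent's scope."""
    if action not in ALLOWED_ACTIONS:
        raise PermissionError(f"action {action!r} is outside this agent's scope")
    if action in NEEDS_APPROVAL and approved_by is None:
        return "pending"
    return "run"


escalation = authorize("escalate")
unapproved = authorize("suppress")
approved = authorize("suppress", approved_by="analyst-1")
```

An out-of-scope request such as `authorize("isolate_host")` raises `PermissionError` instead of silently running.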

How are Threat Actors Leveraging AI?

It’s also important to note that AI isn’t just a tool for defenders. Threat actors are already adopting AI to improve scale, deception, and speed.

AI-Augmented Phishing and Deepfakes

Attackers are using AI tools to generate hyper-personalized phishing lures. By scraping public data and simulating human tone, AI can craft convincing messages at scale. Some groups are even using deepfake audio and video to impersonate executives or employees, enhancing the effectiveness of social engineering attacks.

Weaponized LLMs

ReliaQuest researchers have observed threat actors fine-tuning open-source LLMs to generate malicious code, write convincing business emails, or impersonate support agents. These models can automate initial access attempts or create realistic-looking infrastructure, such as fake login pages.

In some cases, attackers deploy their own private LLMs to avoid detection, bypass usage restrictions, and customize them for their specific playbooks.

Autonomous Attack Chains and Agentic AI

ReliaQuest researchers have also observed attacker workflows moving toward agentic AI, with autonomous systems that can scan for vulnerabilities, generate payloads, test defenses, and deploy attacks in coordinated chains.

These malicious agents can:

Chain tasks without human intervention

Dynamically adapt to network defenses

Exploit exposed APIs, misconfigurations, or weak identity infrastructure

While many of these systems are still in early-stage experimentation, the threat is real and growing.

Cryptocurrency and Money Laundering

Cybercriminals are using AI to automate money laundering through mixing services and smart contracts. These tools can analyze blockchain patterns, find the least risky paths, and obfuscate transactions at scale.

What is the Future of AI SOC Agents?

Future SOCs won’t just use AI; they will rely on it. As environments grow more complex and threats more dynamic, AI agents will be critical for keeping pace.

The Cost of Inaction

The biggest risk facing security teams isn’t AI failing, it’s failing to adopt AI at all. Without intelligent automation, SOCs struggle to scale. Analysts remain stuck in manual triage, while threat actors accelerate with AI-powered tooling. Defenders must match the speed, creativity, and automation of their adversaries or risk falling behind.

Proactive Learning and Adaptation

Modern SOC agents aren’t static scripts. They continuously adapt based on telemetry, threat models, and analyst feedback. When grounded in historical data and validated through human-in-the-loop feedback, agents reduce false positives and surface high-fidelity incidents faster.

Driving Threat Hunting and Hypothesis Testing

AI agents can go beyond basic triage – clustering signals, pre-building timelines, and surfacing suspicious behavior. As orchestration improves, analysts will be able to ask questions like: “What tactics have we seen this actor use in our environment over the last 90 days?” and receive an AI-generated summary in seconds.

Cost Efficiency and Talent Leverage

AI SOC agents enhance, rather than replace, human analysts. By offloading repetitive tasks and reducing cognitive strain, they give analysts the capacity to focus on higher-impact work like threat modeling, detection tuning, and proactive defense. This shift not only improves security outcomes but also lowers the cost per incident and helps lean teams scale their impact.

Increased Use

The industry is moving fast. Gartner reported a 750% increase in AI-agent-related inquiries between Q2 and Q4 of 2024, naming agentic AI one of the biggest trends of the year – a clear sign that organizations are prioritizing agentic systems to gain a competitive edge.

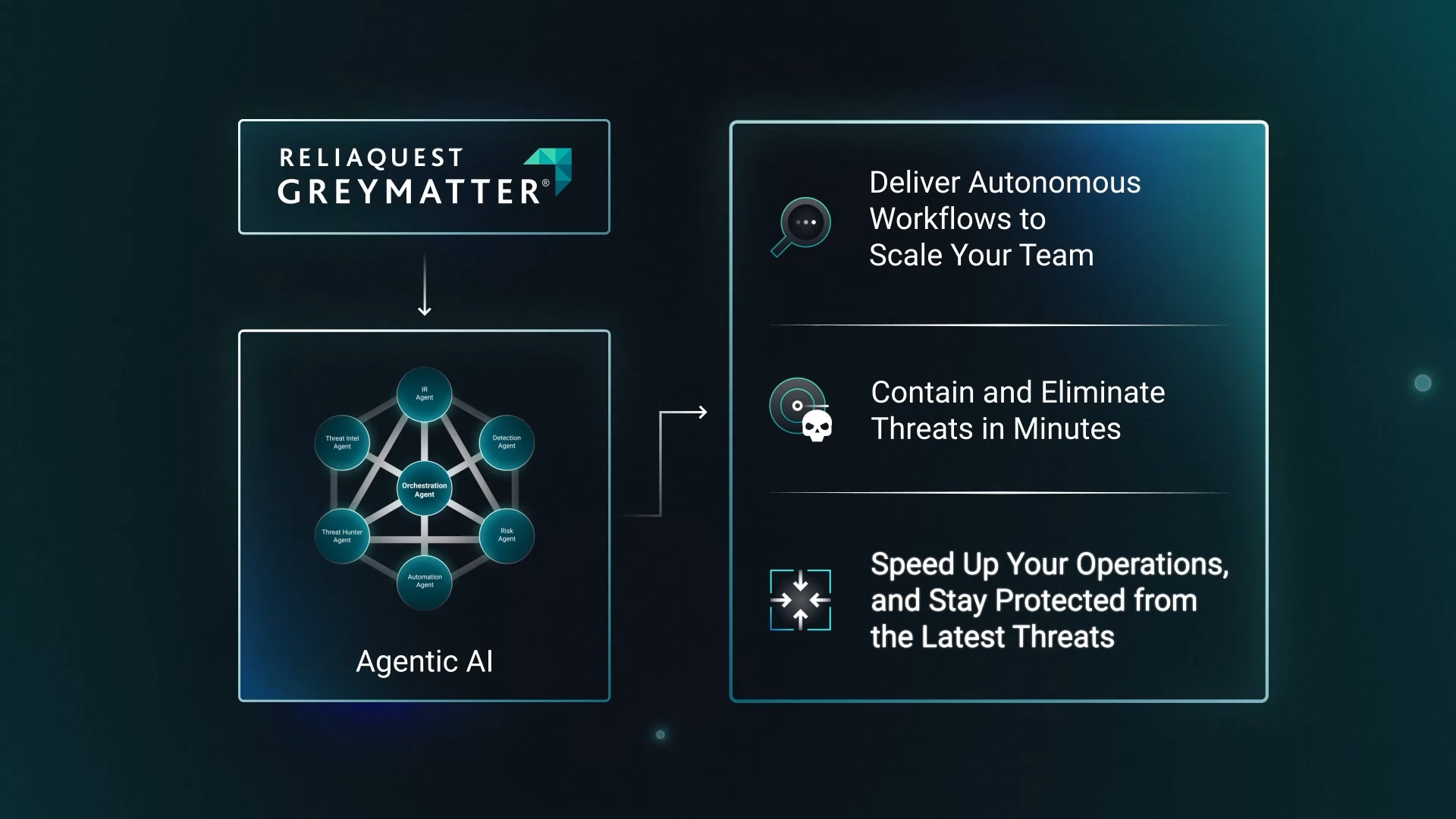

Multi-Agent Security Technology

See how autonomous agents work together inside a modern SOC. This video shows how ReliaQuest's AI orchestrator agent coordinates with specialized AI agents in GreyMatter to detect, enrich, and escalate threats in real time, turning telemetry into action with minimal human input. This multi-agent security technology complements the architecture outlined above and shows what agentic execution looks like in practice.